11. Vector Spaces of Least Squares and Linear Equations

Michael Friendly, Georges Monette, John Fox, Phil Chalmers

2024-11-24

Source:vignettes/aB-data-beta.Rmd

aB-data-beta.RmdThis vignette illustrates the relationship between simple linear regression via least squares, in the familiar data space of and an equivalent representation by means of linear equations for the observations in the less familiar space of the model parameters in the model .

In data space, we probably all know that the least squares solution can be visualized as a line with intercept and slope . But in space, the same solution is the point, .

There is such a pleasing duality here:

- A line in data space corresponds to a point in beta space

- A point in data space corresponds to a line in beta space

But wait, there’s one more space: observation space, the n-dimensional space for n observations. These ideas have a modern history that goes back to Dempster (1969). It was developed in the context of linear models by Fox (1984) and Monette (1990). Some of these geometric relations are explored in a wider context in Friendly et al. (2013).

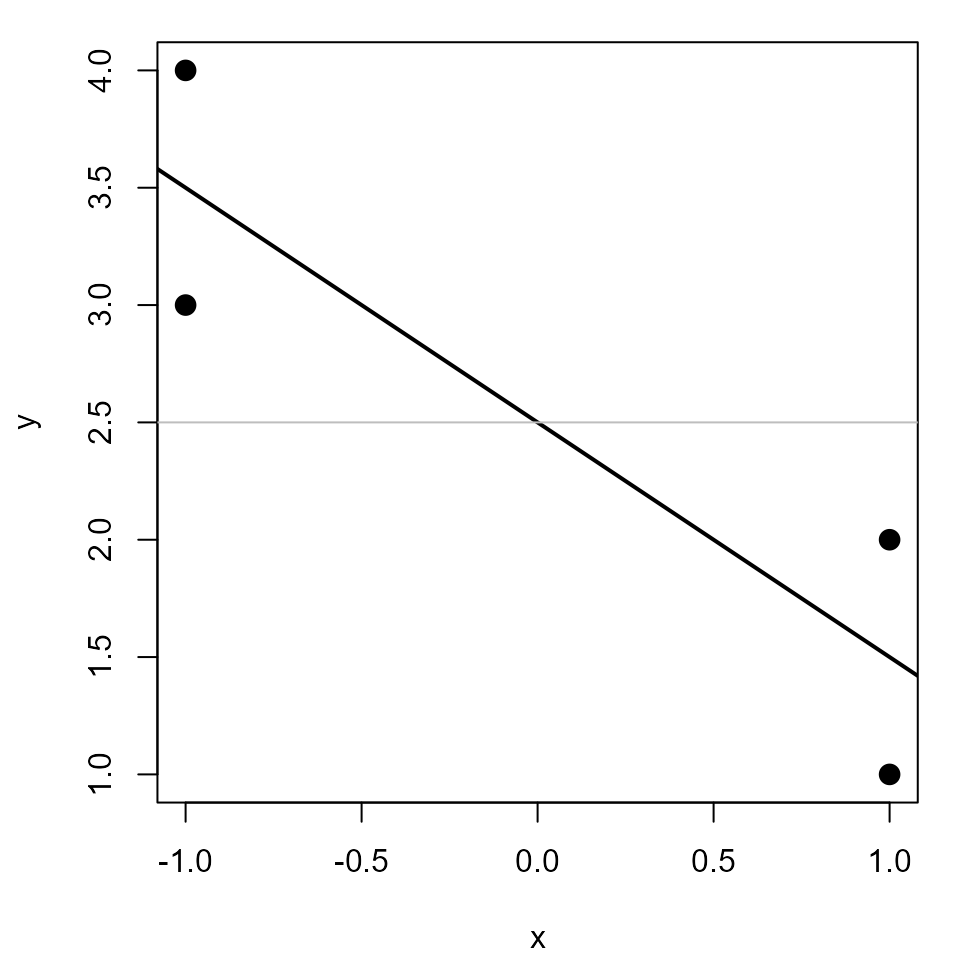

Data space

We start with a simple linear regression problem, shown in data space.

x <- c(1, 1, -1, -1)

y <- 1:4Fit the linear model, y ~ x. The intercept is

b0 = 2.5 and the slope is b1 = -1.

(mod <- lm(y ~ x))

##

## Call:

## lm(formula = y ~ x)

##

## Coefficients:

## (Intercept) x

## 2.5 -1.0Plot the data and the least squares line.

par(mar=c(4,4,1,1)+.1)

plot(y ~ x, pch=16, cex=1.5)

abline(mod, lwd=2)

abline(h = coef(mod)[1], col="grey")

A plot showing 4 points in data space and the linear regression line

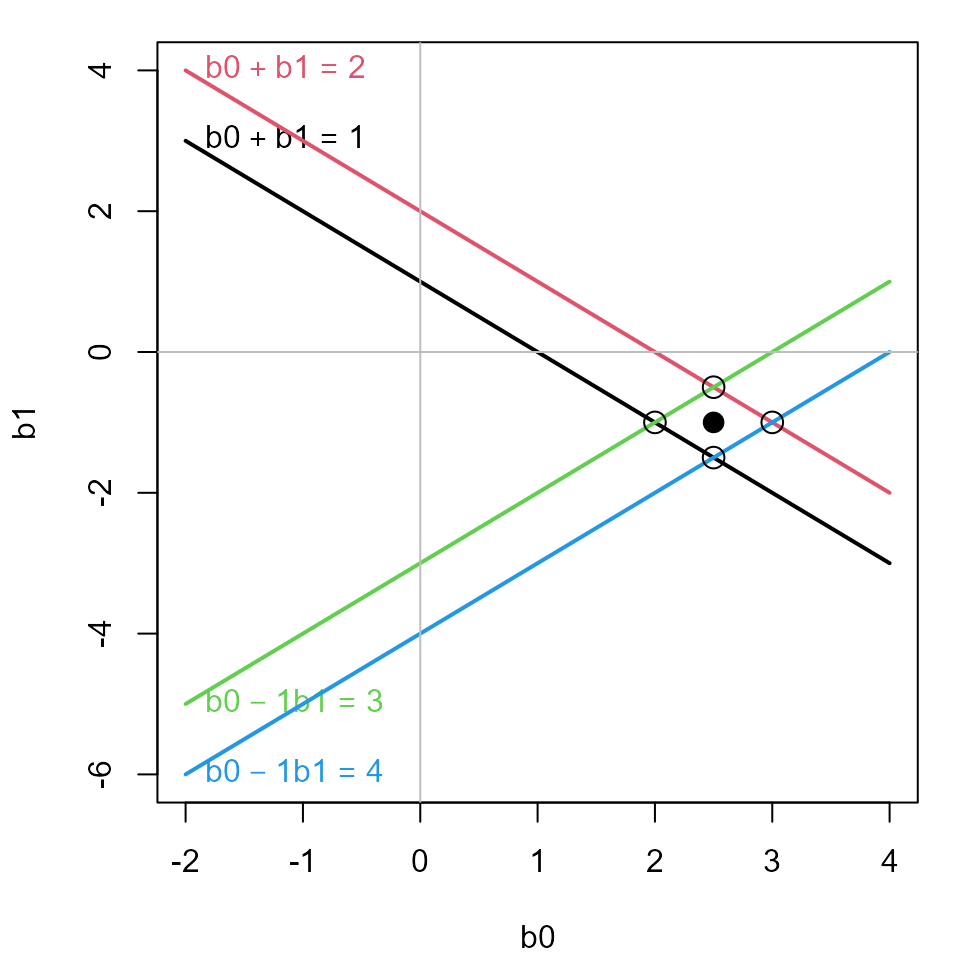

Linear equation () space

This problem can be represented by the matrix equation, .

X <- cbind(1, x)

printMatEqn(y, "=", X, "*", vec(c("b0", "b1")), "+", vec(paste0("e", 1:4)))

## y X

## 1 = 1 1 * b0 + e1

## 2 1 1 b1 e2

## 3 1 -1 e3

## 4 1 -1 e4Each equation is of the form . The least squares solution minimizes .

We can also describe this a representing four equations in two

unknowns, c("b0", "b1").

showEqn(X, y, vars=c("b0", "b1"), simplify=TRUE)

## b0 + b1 = 1

## b0 + b1 = 2

## b0 - 1*b1 = 3

## b0 - 1*b1 = 4Each equation corresponds to a line in

space. Let’s plot them. plotEqn draws a point at the

intersection of each pair of lines — a solution for that pair of

observations.

plotEqn(X, y, vars=c("b0", "b1"), xlim=c(-2, 4))

## b0 + b1 = 1

## b0 + b1 = 2

## b0 - 1*b1 = 3

## b0 - 1*b1 = 4Each point in data space corresponds to a line in “beta” space. The figure shows the four lines corresponding to the points in the previous figure.

In this space, not all observation equations can be satisfied simultaneously, but a best approximate solution can be represented in this space by the coefficients of the linear model , where the intercept is already included as the first column in .

plotEqn(X, y, vars=c("b0", "b1"), xlim=c(-2, 4))

## b0 + b1 = 1

## b0 + b1 = 2

## b0 - 1*b1 = 3

## b0 - 1*b1 = 4

solution <- lm( y ~ 0 + X)

loc <- coef(solution)

points(x=loc[1], y=loc[2], pch=16, cex=1.5)

The LS solution is shown by the black point, corresponding to .

Observation space

There is also a third vector space, one where the coordinate axes

refer to the observations,

,

with data

.

The

-length

vectors in this space relate to the variables y and

predictors x1, x2 … . Here,

x0 is the unit vector, J(n), corresponding

to the intercept in a model. For

observations, this space has

dimensions.

In the case of simple linear regression, the fitted values,

correspond to the projection of

on the plane spanned by

,

or yhat <- Proj(y, c(bind(x0, x1))).

In this space, the vector of residuals, is the orthogonal complement of , i.e., . Another geometrical description is that the residual vector is the normal vector to the plane.

This space corresponds to the matrix algebra representation of linear regression,

In fact, the least squares solution can be derived purely from the requirement that the vector is orthogonal to the vector of residuals, , i.e., . (The margins of this vignette are too small to give the proof of this assertion.)

Observation space can be illustrated in the vector diagram developed

below, but only in

dimensional space, for an actual data problem. Here, we create

x0, x1 and y for a simple

example.

O <- c(0, 0, 0) # origin

x0 <- J(3) # intercept

x1 <- c(0, 1, -1) # x

y <- c(1, 1, 4) # y

y <- 2 * y / floor(len(y)) # make length more convenient for 3D plotThis implies the following linear equations, ignoring residuals.

X <- cbind(x0, x1) # make a matrix

showEqn(X, y, vars=colnames(X), simplify=TRUE)

## x0 = 0.5

## x0 + x1 = 0.5

## x0 - 1*x1 = 2To display this in observation space,

- First create a basic 3D plot showing the coordinate axes.

- Then, use

vectors3d()to draw the vectorsx0,x1andy. - The plane spanned by

x0, andx1can be specified as as the normal vector orthogonal to both, using the newmatlib::xprod()function. - Finally, we use

Proj()to find the projection ofyon this plane.

win <- rgl::open3d()

# (1) draw observation axes

E <- diag(3)

rownames(E) <- c("1", "2", "3")

vectors3d(E, lwd=2, color="blue")

# (2) draw variable vectors

vectors3d(t(X), lwd=2, headlength=0.07)

vectors3d(y, labels=c("", "y"), color="red", lwd=3, headlength=0.07)

# (3) draw the plane spanned by x0, x1

normal <- xprod(x0, x1)

rgl::planes3d(normal, col="turquoise", alpha=0.2)

# (4) draw projection of y on X

py <- Proj(y, X)

rgl::segments3d(rbind( y, py)) # draw y to plane

rgl::segments3d(rbind( O, py)) # origin to py in the plane

corner( O, py, y, d=0.15) # show it's a right angle

arc(y, O, py, d=0.2, color="red")This plot is interactive in the HTML version. Use the mouse wheel to expand/contract the plot. Drag it to rotate to a different view.

You can also spin the plot around it’s any axis or create a movie, but that isn’t done in this vignette.

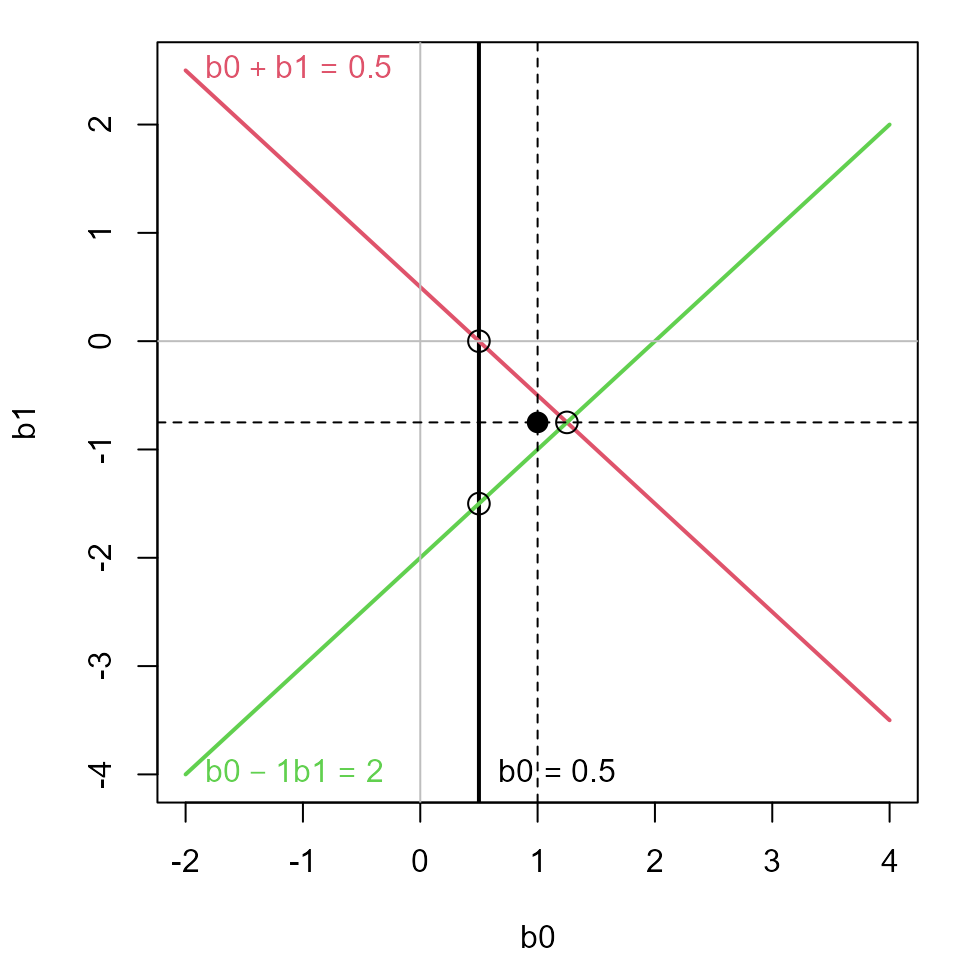

For comparison, we can also show the least squares solution in data or space. Here it is in linear equation () space.

X <- cbind(x0, x1)

plotEqn(X, y, vars=c("b0", "b1"), xlim=c(-2, 4))

## b0 = 0.5

## b0 + b1 = 0.5

## b0 - 1*b1 = 2

solution <- lm( y ~ 0 + X)

loc <- coef(solution)

points(x=loc[1], y=loc[2], pch=16, cex=1.5)

abline(v=loc[1], lty=2)

abline(h=loc[2], lty=2)

References

Dempster, A. P. (1969). Elements of Continuous Multivariate Analysis, Addison-Wesley,

Fox, J. (1984). Linear Statistical Models and Related Methods. NY: John Wiley and Sons.

Friendly, M.; Monette, G. & Fox, J. (2013). Elliptical Insights: Understanding Statistical Methods Through Elliptical Geometry Statistical Science, 28, 1-39.

Monette, G. (1990). “Geometry of Multiple Regression and Interactive 3-D Graphics” In: Fox, J. & Long, S. (Eds.) Modern Methods of Data Analysis, SAGE Publications, 209-256